Editor’s Story - Keeping the AI Incidents Fresh

Want to make AI safer? Submit an incident report.

Want to become an Editor? Learn more in the Editor’s Guide

Want to follow incident developments? Subscribe to “The AI Incident Briefing”

At the Collab, our first order of business is indexing the universe of AI incidents. Contributors and Editors are of utmost importance to this effort and central to the mission of ensuring the safety of AI deployments. Incident editors work collaboratively to determine whether real-world events are "incidents" and require an incident profile on incidentdatabase.ai.

Having recently indexed the 500th distinct incident expressed across more than 2,500 reports, we want to celebrate AI Incident Database editors by highlighting their stories.

Daniel Atherton, Editor and Contributor

Volunteer at the Collab since June 2022

How did you get involved?

I was working with Patrick Hall of BNH on a lot of knowledge base work, including tracking and taxonomizing incidents. He recommended coordinating my work on that front with the AI Incident Database.

What’s the biggest challenge with editing?

Determining the specifics can be challenging, such as establishing the precise chronology of events, who exactly deployed the technology, and who precisely the harmed (or nearly harmed) parties were. Some reports might cite one example of a harmed party while referring to a variety of other harmed parties without going into the specifics. That can result in a lot of research to establish the full picture.

What’s an incident you find particularly interesting?

Incidents 112, 255, 256, 257, 429, and 446 in the Database all pertain to ShotSpotter, which law enforcement agencies, in tandem with municipalities, use to try to identify and locate gunfire. I've been interested in mapping the complicated dimensions of the reporting on this technology and its deployment.

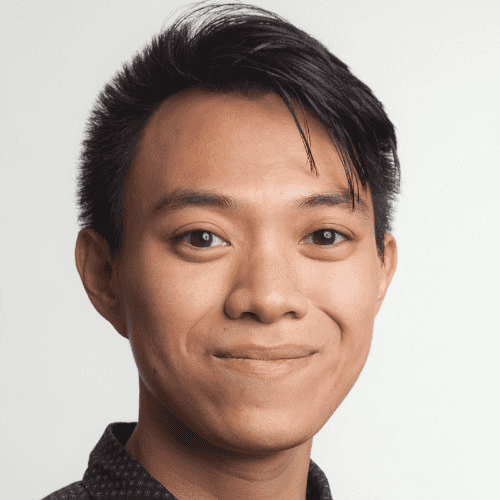

Khoa Lam, Senior Editor

Staff at the Collab since May 2022

How did you get involved?

Two years ago, when I learned about the incident database, it struck me as a great tool for learning about the harms and failures of algorithmic systems. As a way to familiarize myself with the incidents as a data vis nerd, I made an interactive timeline showcasing the incidents over time. Eventually, when the database was looking for an editor, I reached out and applied for the role, as I wanted to understand the incidents at a deeper level, and I wanted to contribute to the curation and quality management of the incident data.

What’s the biggest challenge with editing?

The most important challenge to me is determining whether something is an incident at all. Of course, editors of the database have a guide to operationalize this question, but incidents “in the wild” occasionally resist fitting into our neatly defined box. I find these cases both humbling and inspiring, as they remind us that incidents involving AI, much like AI systems, are shaped by humans and reflect our perspectives, values, and biases.

What’s an incident you find particularly interesting?

I find certain incidents featuring out-of-purpose AI use both fascinating and harrowing. One illustrative case is incident 518, where an NYC detective reportedly used the face of Woody Harrelson (an American actor) as a replacement for a suspect’s face in a facial recognition search that resulted in an arrest. The detective had first used the suspect’s face but had not received useful matches, as the surveillance footage containing his face was highly pixelated. Noticing the resemblance between the suspect’s face and that of Woody Harrelson, and not accepting the limitations of the AI tool, the detective proceeded to run the search with the actor’s face, which led to the false accusation of an innocent victim.

This incident, to me, highlights an urgent need for much better governance, explainability, monitoring, and accountability of AI use, especially for such a high-stakes, public-affecting application. I find this incident fascinating as it challenges existing knowledge and mitigation strategies around automation and confirmation biases, which typically do not account for efforts of users to circumvent known limits of their AI tools.

Kate Perkins, Editor and Contributor

Volunteer at the Collab since June 2021, Editor since November 2022

How did you get involved?

I first learned about the AI Incident Database (AIID) through a WIRED article titled “Don’t End Up on This Artificial Intelligence Hall of Shame” in June of 2021. As I work on Responsible AI, the AIID’s goal to bring awareness to past AI harms with the hope of learning from these experiences “so we can prevent or mitigate bad outcomes” really spoke to me. I reached out to Sean McGregor to learn more and immediately began submitting incident reports I came across. As I learned more about the submission process, I was able to start contributing more to the AIID as an editor.

What’s the biggest challenge with editing?

For new incidents, it can be time-consuming to do the research to find the first date of the AI system harm and other key information such as the developer of the AI system. When full submissions are entered into the database, it reduces the work an editor needs to put in to ingest a new report. I’ve also come across articles that highlight multiple AI harms, in which case we can map the report to multiple incidents. The Editor’s Note field in the submission form is a great place to add any extra detail about the incident(s) being submitted.

What’s an incident you find particularly interesting?

When sharing information about the AIID, I frequently reference incident 6 and incident 106 about the Microsoft Tay and Scatter Lab Lee Luda chatbots. Both incidents highlight the same AI harm that comes from training chatbots on data that includes inappropriate language and conversation. I hope we have collectively learned from these experiences and don’t see a third chatbot end up in the AIID with the same harm.

There are also a lot of incidents emerging around generative AI systems as we see these technologies scale and be adopted in new use cases. My hope is that we quickly learn from the harms associated with generative AI systems so that we can responsibly leverage this emerging technology.

However, the one incident that has really stuck with me is incident 384, in which Sebastian Galassi, a Glovo driver in Italy, was fired by an AI algorithm due to non-compliance after the employee was killed in a car accident while making a delivery on Glovo’s behalf. This incident highlights the real-world harms that can come from AI systems used to lay off workers. If there had been a human-in-the-loop, they could have recognized the reason the Glovo delivery was incomplete and handled the situation with more compassion and empathy. In this incident, the AI system was insensitive to the tragic event that occurred and caused further harm to Galassi and their family. A key lesson I took away from this incident is that AI used in performance management systems can benefit from having human oversight to avoid dehumanizing effects.

Janet Schwartz, Editor

Staff at the Collab since June 2022

How did you get involved?

I had met (founder) Sean McGregor some years ago through a mutual friend and had discussed our common interest in big data at the time. Last year I found out that Sean was founding the Responsible AI Collaborative to support the AIID, and so I asked if I could be a part of the team. My background was in IT, but I only had a layman’s understanding of machine learning or AI. The last year has been an incredible journey into the field and I have so enjoyed helping to grow the AIID and its contributor base.

What’s the biggest challenge with editing?

The biggest challenge for me has been dealing with reports that are one element of a bigger story. This can require digging up related articles to determine the root cause(s), original incident date, and involved actors. However, these are some of the more interesting reports to dive into, since they require research and critical thinking to determine these answers.

What’s an incident you find particularly interesting?

Several incidents and issue reports have recently caught my attention because they have potentially serious ramifications for ID verification. In incident 485, a UK journalist used an AI-generated version of his voice to successfully access his bank account, and in incident 523, an Australian journalist used a similar method to gain access to his Centrelink account. In issue report 2962, a cybersecurity firm ran tests with the AI system PassGAN to demonstrate how quickly passwords can be cracked. (Issue reports can be thought of as “pre-incidents”, or warnings about harms that are likely to be realized in the near future.)

This points to a general need to reconsider the common methods of verification used by a huge number of organizations.

Want to become an AI Incident Editor? Half of the editors active in the AI Incident Database hold their positions because they submitted a large number of high-quality incidents. You can follow the same path by regularly submitting incidents that meet the full editorial standards, then either asking or being invited to join the panel. Without help from people like you, we will inevitably fail to learn the lessons of the past. Please submit incidents!